Latest Blog Posts

You can access my five most recent posts using the links above.

Looking for a different post?

From March 2010 through February 2018 I blogged at https://edtechfrontier.com.

Starting February 2022 I’ve been blogging here on this site.

For earlier posts dating back to February 2022 scroll down or use Search:

The Tragedy of AI (and what to do about it)

Image adapted from Tragedy and Comedy by Tim Green CC BY

A tragedy is characterized by four things:

A heroic noble protagonist

Fatal flaw(s)

An inevitable downfall

Catharsis

Heroic Noble AI image adapated fom Super Heros by 5chw4r7z CC BY

Fatal Flaws image adapted from They Always Focus On Our Flaws by Theilr CC BY-SA

Inevitable Downfall image adapted from The Downfall Of Machismo by Tman CC BY-NC-SA

Catharsis image adapted from Catharsis by Jason Jacobs CC BY

I’m doing a presentation titled “The Tragedy of AI (and what to do about it)” Monday June 23, 2025 at the Association of Learning Technology (ALT) Open Education Conference (OER25) in London England. The theme of this event is “Speaking Truth to Power: Open Education and AI in the Age of Populism”.

To complement and accompany my presentation I’ve written this essay blog post in two parts.

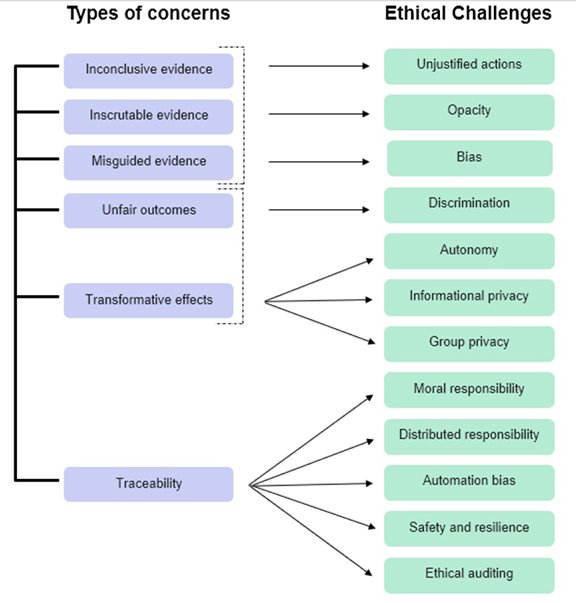

Part one, “The Tragedy of AI” establishes why AI is a tragedy. It reveals fatal flaws of AI. It shows how these fatal flaws risk leading to tragic inevitable downfall. This Tragedy of AI essay specifically focuses on the negative impact AI is having on the social agreements and fundamental principles that underlie open education, open science, and other open knowledge practices.

Part two, “Catharsis” explores the “(and what to do about it)” part of the Tragedy of AI. It puts forward options for taking action to address or prevent the tragedy of AI. It defines actions in ways that fit with open and deal with the fatal flaws of AI in ways that mitigate downfall.

PART ONE - The Tragedy of AI

AI as Heroic Noble Protagonist

Much has been written about the exceptional capabilities of AI. The OER25 conference program is chock a block full of such sessions. This post doesn’t delve into that, enough is being said.

The focus of this post is instead on the other three characteristics of a tragedy - fatal flaws, inevitable downfall, and catharsis.

The Fatal Flaws of AI

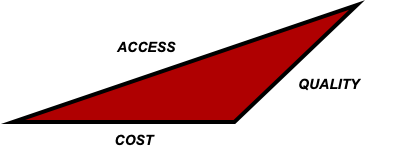

There are five fatal flaws of AI:

Tragedy of the Capitalism over the Commons

Taking without consent

Demotivating sharing

Breaking reciprocity, and

Bleeding open dry

Lets dig in to each of them.

AI Fatal Flaw #1 - Tragedy of the Capitalism over the Commons

Everyone knows, as Darcy Norman puts it,

“GenAI tools, based on Large Language Models (LLMs), are built by extracting content from across the internet—often without clear consent or compensation. This extraction-based origin demands caution.”

I put it a bit more forcefully. AI as a robbing, a plunder, a pillaging of a public commons - all of the Internet. In creating their Large Language Models developers of AI have helped themselves to all the content you and I have on the Internet. AI as an appropriation of a commons with little to no regard for the commons itself or the social norms associated with it.

Robin Sloan in his essay “Is it okay?” says:

“How do you make a language model? Goes like this: erect a trellis of code, then allow the real program to grow, its development guided by a grueling training process, fueled by reams of text, mostly scraped from the internet. Now. I want to take a moment to think together about a question with no remaining practical importance, but persistent moral urgency:

Is that okay?

The question doesn’t have any practical importance because the AI companies — and not only the companies, but the enthusiasts, all over the world — are going to keep doing what they’re doing, no matter what.

The question does still have moral urgency because, at its heart, it’s a question about the things people all share together: the hows and the whys of humanity’s common inheritance. There’s hardly anything bigger.”

In 1968 Joseph Hardin wrote the “Tragedy of the Commons”. In it he asks us to:

“Picture a pasture open to all. It is to be expected that each herdsman will try to keep as many cattle as possible on the commons. Such an arrangement may work reasonably satisfactorily for centuries because tribal wars, poaching, and disease keep the numbers of both man and beast well below the carrying capacity of the land.”

But what happens when the capacity of the land is met? Hardin says;

“The rational herdsman concludes that the only sensible course for him to pursue is to add another animal to his herd. And another; and another.... But this is the conclusion reached by each and every rational herdsman sharing a commons. Therein is the tragedy. Each man is locked into a system that compels him to increase his herd without limit-in a world that is limited.”

The effects of this self-interest over group interest Hardin suggests are overgrazing and a destruction of the commons to the detriment of all.

Louis Villa in his "My commons roller coaster" says:

"Whether or not the web was a “commons” is complicated, so I don’t want to push that label too hard. (As just one example, there was not much boundary setting—one of Ostrom’s signals of a traditional common pool resource.) But whether or not it was, strictly speaking, a “commons”, it was inarguably the greatest repository of knowledge the world had ever seen. Among other reasons, this was in large part because the combination of fair use and technical accessibility had rendered it searchable. That accessibility enabled a lot of good things too—everything from language frequency analysis to the Wayback Machine, one of the great archives of human history.

But in any case it’s clear that those labels, if they ever applied, very much merit the past tense. Search is broken; paywalls are rising; and our collective ability to learn from this is declining. It’s a little much to say that this paper is like satellite photos of the Amazon burning... but it really does feel like a norm, and a resource, are being destroyed very quickly, and right before our eyes."

Image adapted from of Global Carbon Monoxide by NASA CC BY

AI is a tragedy based on AI developers assuming everything on the web is there for the taking, acting autonomously, out of pure self-interest without interaction or consideration of the commons or the people that make that commons. In doing so AI negatively impacts practices of sharing and openness risking the destruction of the very commons on which it relies.

Anna Tumadóttir in her article “Reciprocity in the Age of AI” says:

“What we ultimately want, and what we believe we need, is a commons that is strong, resilient, growing, useful (to machines and to humans)—all the good things, frankly. But as our open infrastructures mature they become increasingly taken for granted, and the feeling that “this is for all of us” is replaced with “everyone is entitled to this”. While this sounds the same, it really isn’t. Because with entitlement comes misuse, the social contract breaks, reciprocation evaporates, and ultimately the magic weakens.

Hardin’s Tragedy of the Commons intuitively resonates with us. Air pollution, over grazing, deforestation, climate change and other global challenges align with his notion that people always act out of selfish self interest. But the tragedy of the commons is a concept, a theory, and as Elinor Ostrom’s Nobel prize winning work showed, it is not how a commons works in practice.

The reality is that AI is not a Tragedy of the Commons. It is a Tragedy of Capitalism. It is a tragedy of self-interest and the belief in competition over cooperation. It is a tragedy of the relentless pursuit of growth and profit no matter what the cost.

In part two “Catharsis” I’ll describe some of the ways Ostrom discovered people mitigate the potential for a tragedy of the commons and explore how we can apply these ideas to AI and our open work.

For those of you interested in exploring more about the differences between how a commons works and how capitalism works I highly recommend these resources:

The Serviceberry - Abundance and Reciprocity in the Natural World by Robin Wall Kimmerer. This beautiful book published in 2024 is an eloquent exploration of the issue based on lessons from the natural world.

The Gift - How the Creative Spirit Transforms the World by Lewis Hyde. This book explores old gift-giving cultures and their relation to commodity societies.

Doughnut Economics - Seven Ways to Think Like a 21st Century Economist by Kate Raworth. Doughnut economics, is a visual framework for sustainable development. The framework is shaped like a doughnut, hence its name. It explores the creation of an alternate economy that meets the needs of people without overshooting Earth's ecological ceiling re-framing economic problems in the process.

Made With Creative Commons by Paul Stacey and Sarah Hinchliff Pearson. While working at Creative Commons I co-wrote this book with Sarah. It is guide to sharing your knowledge and creativity with the world, and sustaining your operation while you do.

AI Fatal Flaw #2 - Taking Without Consent

As Daniel Campos notes,

AI is constructed non-consensually on the back of copyrighted material

This is one of the greatest stains on this technology, period.

People’s work was taken, without consent, and it is now being used to generate profit for parties visible and not.

Thing is, this is unsustainable for all parties. The technology requires ongoing training to remain relevant. Everyone who is part of its value chain, in the long term, must be represented in both its profits and the general framework for participation.

AI developers did not seek permission. They mined any works they wanted. No care was taken as to whether the works are under copyright or have an open license. Everything on the Internet, content of all kinds, posts, books, journal articles, music, … it didn’t matter, AI developers view all such resources as legitimate sources of data for its models. The more data mined the better the AI model.

As Johana Bhuiyan notes in “Companies building AI-powered tech are using your posts” reports:

“"Even if you haven’t opted in to letting them use your data to train their AI, some companies have opted you in by default. Figuring out how to stop them from hoovering up your data to train their AI isn’t exactly intuitive. Left to their own devices, more than 300,000 Instagram users have posted a message on their stories in recent days stating they do not give Meta permission to use any of their personal information to inform their AI. To be clear, just like the Facebook statuses of yore, a simple Instagram post will not do anything to stop Meta from using your data in this way.

This is not a new practice as Bhuiyan notes:

“Default opt-ins are an industry-wide issue. A recent report by the Federal Trade Commission (FTC) on the data practices of nine social media and streaming platforms including WhatsApp, Facebook, YouTube and Amazon found that nearly all of them fed people’s personal information into automated systems with no comprehensive or transparent way for users to opt out.

Overall, there was a lack of access, choice, control, transparency, explainability and interpretability relating to the companies’ use of automated systems,” the FTC report reads.”

AI developers are facing lawsuits over their use of content without consent. Creators are advocating for consent, credit and compensation. AI developers seem to be speaking out of two sides of their mouths on this issue. On the one side they are claiming their use of content is allowed under copyright fair use (in the US) or text and data mining rules. On the other they are simultaneously pursuing licensing deals with major media rights holders.

Consent to use is not just about data scraped from the web. It’s also about the data associated with your use of the AI itself. Sadly the business models of AI are further entrenching have’s vs have nots. If you are using a free version of an AI application your prompts and outputs are, by default, being used to train the AI. If you are using paid versions of an AI application your prompts and outputs are not being used to train the AI. For example Google’s Gemini AI terms of use says this:

“When you use Paid Services, including, for example, the paid quota of the Gemini API, Google doesn't use your prompts (including associated system instructions, cached content, and files such as images, videos, or documents) or responses to improve our products.”

“When you use Unpaid Services, including, for example, Google AI Studio and the unpaid quota on Gemini API, Google uses the content you submit to the Services and any generated responses to provide, improve, and develop Google products and services and machine learning technologies, including Google's enterprise features, products, and services.”

So if you can afford to pay to use AI your prompts and outputs aren’t used to train the AI. If you are less well off and can’t afford to pay then you pay by agreeing to have your data used.

AI Fatal Flaw #3 - Demotivating Sharing

Sharing is central to open practices whether that be open education, open science, open data, open culture or any of the many other forms of open. Creative Commons licenses have been a key means by which sharing of content has been enabled.

However, as Anna Tumadóttir, CEO of Creative Commons, says in her article "Reciprocity in the Age of AI":

“Since the inception of CC (Creative Commons), there have been two sides to the licenses. There’s the legal side, which describes in explicit and legally sound terms, what rights are granted for a particular item. But, equally there’s the social side, which is communicated when someone applies the CC icons. The icon acts as identification, a badge, a symbol that we are in this together, and that’s why we are sharing. Whether it’s scientific research, educational materials, or poetry, when it’s marked with a CC license it’s also accompanied by a social agreement which is anchored in reciprocity. This is for all of us.

But, with the mainstream emergence of generative AI, that social agreement has come into question and come under threat, with knock-on consequences for the greater commons. Current approaches to building commercial foundation models lack reciprocity. No one shares photos of ptarmigans to get rich, no one contributes to articles about Huldufólk seeking fame. It is about sharing knowledge. But when that shared knowledge is opaquely ingested, credit is not given, and the crawlers ramp up server activity (and fees) to the degree where the human experience is degraded, folks are demotivated to continue contributing.”

Sadly AI demotivates sharing.

Image adapted from Sharing With Others by Frank Homp CC BY

AI Fatal Flaw #4 - Breaking Reciprocity

At this point AI is a permissionless, acquisition and assertion of the right to use the works of others. It is a utilization of others works for profit without reciprocity. By breaking with the reciprocal social exchange principle that involves the giving and receiving of benefits or actions between individuals AI engages in negative reciprocity gaining more out of the exchange than the public and naturally people feel wronged.

Image adapted from Reciprocity by Keith Solomon CC BY-NC-SA

AI developers do not seek permission. They mine any works they want. They don’t care whether the works used are under copyright or have an open license. Everything on the Internet, books, journal articles, music, … it doesn’t matter, all such resources are considered legitimate sources of data for its models.

As Frank Pasquale and Haochen Sun note,

“Most AI firms are not compensating creative workers for composing the songs, drawing the images, and writing both the fiction and nonfiction books that their models need in order to function. AI thus threatens not only to undermine the livelihoods of authors, artists, and other creatives, but also to destabilize the very knowledge ecosystem it relies on.”

They go on to note that legislators agree,

“The situation strikes many policymakers as deeply unfair and undesirable. As the Communications and Digital Committee of the United Kingdom’s House of Lords has concluded, “[w]e do not believe it is fair for tech firms to use rightsholder data for commercial purposes without permission or compensation, and to gain vast financial rewards in the process.”

And this is not just an issue for proprietary works. Openly licensed and peer reviewed sources of data are considered high quality and AI prizes such data sources. But AI violates and degrades the integrity of open sharing by taking without giving. As Stephanie Decker notes in The Open Access – AI Conundrum: Does Free to Read Mean Free to Train?,

"The central issue remains that commercial AI companies extract significant economic value from OA content without necessarily returning value to the academic ecosystem that produced it, while at the same time disrupting academic incentive structures and attribution mechanisms."

Creative Commons CEO Anna Tumadóttir says,

“Reciprocity in the age of AI means fostering a mutually beneficial relationship between creators/data stewards and AI model builders. For AI model builders who disproportionately benefit from the commons, reciprocity is a way of giving back to the commons that is community and context specific.

(And in case it wasn’t already clear, this piece isn’t about policy or laws, but about centering people).

This is where our values need to enter the equation: we cannot sit neutrally by and allow “this is for everyone” to mean that grossly disproportionate benefits of the commons accrue to the few. That our shared knowledge pools get siphoned off and kept from us.

We believe reciprocity must be embedded in the AI ecosystem in order to uphold the social contract behind sharing. If you benefit from the commons, and (critically) if you are in a position to give back to the commons, you should. Because the commons are for everyone, which means we all need to uphold the value of the commons by contributing in whatever way is appropriate.

There never has been, nor should there be, a mandatory 1:1 exchange between each individual and the commons. What’s appropriate then, as a way to give back?”

We’ll explore answers to this question in the second part of this essay “Catharsis”.

AI Fatal Flaw #5 - Bleeding Open Dry

Molly White in her post “Wait, not like that”: Free and open access in the age of generative AI” says,

“The real threat isn’t AI using open knowledge — it’s AI companies killing the projects that make knowledge free. The true threat from AI is that they will stifle open knowledge repositories benefiting from the labor, money, and care that goes into supporting them while also bleeding them dry. It’s that trillion dollar companies become the sole arbiters of access to knowledge after subsuming the painstaking work of those who made knowledge free to all, killing those projects in the process.”

AI does not acknowledge, care for, or contribute to the stewardship of the commons on which it relies.

Bleeding Open Dry image adapted from Pavement Drain With Patched Cracks photo by Paul Stacey CC BY-SA

Molly goes on to say:

“Anyone at an AI company who stops to think for half a second should be able to recognize they have a vampiric relationship with the commons. While they rely on these repositories for their sustenance, their adversarial and disrespectful relationships with creators reduce the incentives for anyone to make their work publicly available going forward (freely licensed or otherwise). They drain resources from maintainers of those common repositories often without any compensation. They reduce the visibility of the original sources, leaving people unaware that they can or should contribute towards maintaining such valuable projects. AI companies should want a thriving open access ecosystem, ensuring that the models they trained on Wikipedia in 2020 can be continually expanded and updated. Even if AI companies don’t care about the benefit to the common good, it shouldn’t be hard for them to understand that by bleeding these projects dry, they are destroying their own food supply.

And yet many AI companies seem to give very little thought to this, seemingly looking only at the months in front of them rather than operating on years-long timescales.”

Vampire by Alvaro Tapia licensed CC BY-NC-ND

AI is vampiric sucking the lifeblood out of the corpus of all human knowledge and selling it back to us as a monthly subscription.

Image adapted from Hacker Memes based on original Twonks comic by Steve Nelson

The Inevitable Downfall

The fatal flaws of AI lead to an inevitable downfall. The very things AI relies on:

The Commons

Consent and willing participation

Sharing of high quality, thriving, evolving data

Reciprocity

Openness

are its fatal flaws.

Tragedy of Capitalism over the Commons

Taking without consent

Demotivating sharing

Breaking reciprocity, and

Bleeding open dry

The fatal flaws not only have the potential for an inevitable downfall of AI but the downfall of open education, open science and other open practices too.

Every time I use AI I feel like I am aiding and abetting this tragedy and downfall. In my use I condone its taking.

The current issues around ChatGPT and Studio Ghibli describe it well. Shanti Escalante-De Mattei says in her article “The ChatGPT Studio Ghibli Trend Weaponizes “Harmlessness” to Manufacture Consent”:

“Every time a user transforms a selfie, family photo or cat pic into a Ghibli-esque image, they normalize the ability of AI to steal aesthetics, associations, and affinities that artists spend a lifetime building. Participatory propaganda doesn’t require users to understand the philosophical debates about artists’ rights, or legal ones around copyright and consent. Simply by participating they help Altman and his competitors win the battle of public opinion. For many, the Ghibli images will be their first contact with generative AI. For more informed users, the flood of images reinforces the inevitability that AI will re-shape our world. It’s particularly cruel that Miyazaki’s style has become the AI vanguard, given that he famously stuck to laborious hand-drawn animation even as the industry shifted to computer-generated animation.”

She concludes:

"It is laborious, loving, useful work that imparts self-knowledge and the understanding of shared struggle and humanity. You cannot skip to the end. You cannot just generate the artwork or the essay and get the learning and the satisfaction that the work imparts. When you forgo that labor, you let fascists, corporations, and all manner of elite actors make the act of expressing yourself so easy that you feel free, even agentive, as they take your rights from you."

I have used AI. But this is not the kind of relationship I want. I’m not a fan of the enshitification of the Internet and much of AI is following the enshitification model flooding us with slop (deepfakes, misinformation, personality appropriation, bias, … - need I go on?) and exploitive business models.

Oh sure there have been publications about the ethics of AI and attempts to regulate (at least in the EU) but to date there has been no effective repercussion to the tragedy of AI. There is no change in behaviour no adjustment of course. If anything, there is a race to commercialization, economic growth, and global dominance - regulations, guardrails, social agreements be damned.

AI’s trampling of the commons puts it at odds with open.

Should our response simply be one of acceptance?

Everybody Knows but nobody does anything?

Is there no course of action to address or prevent the tragedy of AI?

I think there are lots of emerging options.

I have hope.

So lets move on to “Catharsis”.

PART Two - Catharsis

In this Catharsis section we shift from the Tragedy of AI to the “(and what to do about it)” part. What are options for taking action to address or prevent the tragedy of AI? What actions can the open community take to deal with the fatal flaws of AI in ways that mitigate downfall?

Anna Tumadóttir, CEO of Creative Commons says:

“There never has been, nor should there be, a mandatory 1:1 exchange between each individual and the commons. What’s appropriate then, as a way to give back? So many possibilities come to mind, including:

Increasing agency as a means to achieve reciprocity by allowing data holders to signal their preferences for AI training

Credit, in the form of attribution, when possible

Open infrastructure support

Cooperative dataset development

Putting model weights or other components into the commons

Catharsis can take many forms.

Building on Anna’s list here are five catharsis actions I think the open community can pursue to resolve the fatal flaws of AI and avoid the tragedy:

Social Agreements & Regulations

Preference Signals

Reciprocity

Public AI

Collaborative open data set creation

Lets explore each of these.

AI Catharsis #1 - Social Agreements & Regulations

Samuel Moore in "Governing the Scholarly Commons" says:

"The question, then, is not whether AI is inherently good or bad but more concerning who controls it and with what motivation. If it is answerable to affected communities, ethicists and technological experts, AI may develop in a more productive way than if it is governed by the needs of shareholders and profit-seeking companies. The problem of AI governance – in the context of academic knowledge production – is the focus of this report."

He goes on to suggest:

"The governance of knowledge production is currently weighted heavily in favour of the market, which is to say that decisions to implement a technology or business model are determined by how profitable they are, how much labour they save, or how financially efficient they are. I am therefore interested in ways to keep the power of the market in check in the service of more responsible AI development."

Social Agreements and Regulations image adapted from Agreement by Takashi Toyooka CC BY-NC

Moore's report looks at a range of different strategies for governing the implementation of AI as a scholarly commons. Options for good governance of AI in academic knowledge production include:

Commons-based approaches

Academic governance

Academic "citizen" assemblies

These all have merit.

Commons-based approaches have a high fit with open education and open science.

Nobel prize winning Elinor Ostrom studied many different commons. She found that the tragedy of the commons is not inevitable, as Hardin thought. Instead, if commons participants decide to cooperate with one another, communicate, monitor each other’s use, and enforce rules for managing it, they can avoid the tragedy.

Open education and open science have been functioning in just such a cooperative manner but AI disrupts that practice.

It is in the interest of AI developers and the open community to work together in resolving the fatal flaws and avoid a shared tragedy.

What we need is dialogue, communication and relationship building between the open community and AI developers. There are lots of AI applications targeting education. Their development efforts are proceeding with little to no engagement with the open education community. Open education could step up and take an active leading role in seeking to communicate and influence how AI development for education takes place. But we can’t do that in isolation from the AI developers themselves.

We could for example, seek to work with efforts like Current AI who have a focus area specifically relating to Open supporting open standards and tools that make AI accessible and adaptable for everyone.

Alek Tarkowski in his article "Data Commons Can Save Open AI" says:

"We must do everything in our power to ensure that future datasets are built upon a data commons with stewardship and control."

His recently released report, “Data Governance in Open Source AI- Enabling Responsible and Systemic Access” argues that collective action is needed to release more data and improve data governance to balance open sharing with responsible release.

Consortia of open organizations could collaborate and propose solutions to the fatal flaws. Everyone acknowledges the need for AI guardrails. Clearly rules and agreements are needed. But these can come in many forms ranging from social norms, to policy, and regulations. How would the open community define these?

Over one hundred countries signed on to the "Statement on Inclusive and Sustainable Artificial Intelligence for People and the Planet" committing to:

"Initiate a Global Dialogue on AI governance and the Independent International Scientific Panel on AI and to align on-going governance efforts, ensuring complementarity and avoiding duplication."

How might the open community participate and provide input into such efforts?

Governments are responsible for regulations with the EU AI regulation being the prime example to date. Perhaps AI, developed with public funds, ought to be open to the public that paid for it in a similar manner as with open science and open education?

Over time the legality related to copyright lawsuits AI faces will emerge. But it will take a long time and it is highly politicized as highlighted in Copyright Office head fired after reporting AI training isn’t always fair use. Nevertheless it seems likely there will be new rules that seek to strike some kind of effective balance between the public interests, maintaining a thriving creative community and allowing technological innovation to flourish. It seems prudent that we make an effort to define what that looks like for open education and open science.

One option is for us to define norms and social agreements. We've seen some related action around efforts to define what "open source" means in the context of AI models. But to date there is no similar effort around defining what open means in the context of AI in open education and open science. There are lots of efforts around ethics in relation to AI but not so much around open.

In Governing the Commons, Elinor Ostrom summarized eight design principles associated with creating a sustainable commons:

1. Clearly defined boundaries

2. Congruence between appropriation and provision rules and local conditions

3. Collective-choice arrangements

4. Monitoring

5. Graduated sanctions

6. Conflict-resolution mechanisms

7. Minimal recognition of rights to organize

8. Nested enterprises

These design principles are worth considering as a framework for devising open norms and agreements related commons based approaches to AI.

But there are many more options.

Molly White in her article “Wait, not like that”: Free and open access in the age of generative AI, says,

“It would be very wise for these [AI] companies to immediately begin prioritizing the ongoing health of the commons, so that they do not wind up strangling their golden goose. It would also be very wise for the rest of us to not rely on AI companies to suddenly, miraculously come to their senses or develop a conscience en masse.

Instead, we must ensure that mechanisms are in place to force AI companies to engage with these repositories on their creators' terms.

There are ways to do it: models like Wikimedia Enterprise, which welcomes AI companies to use Wikimedia-hosted data, but requires them to do so using paid, high-volume pipes to ensure that they do not clog up the system for everyone else and to make them financially support the extra load they’re placing on the project’s infrastructure. Creative Commons is experimenting with the idea of “preference signals” — a non-copyright-based model by which to communicate to AI companies and other entities the terms on which they may or may not reuse CC licensed work. Everyday people need to be given the tools — both legal and technical — to enforce their own preferences around how their works are used.

Some might argue that if AI companies are already ignoring copyright and training on all-rights-reserved works, they'll simply ignore these mechanisms too. But there's a crucial difference: rather than relying on murky copyright claims or threatening to expand copyright in ways that would ultimately harm creators, we can establish clear legal frameworks around consent and compensation that build on existing labor and contract law. Just as unions have successfully negotiated terms of use, ethical engagement, and fair compensation in the past, collective bargaining can help establish enforceable agreements between AI companies, those freely licensing their works, and communities maintaining open knowledge repositories. These agreements would cover not just financial compensation for infrastructure costs, but also requirements around attribution, ethical use, and reinvestment in the commons.

The future of free and open access isn't about saying “wait, not like that” — it’s about saying "yes, like that, but under fair terms”. With fair compensation for infrastructure costs. With attribution and avenues by which new people can discover and give back to the underlying commons. With deep respect for the communities that make the commons — and the tools that build off them — possible. Only then can we truly build that world where every single human being can freely share in the sum of all knowledge.”

I think this entire list of suggestions is a good harbinger of what is to come. Of particular note is the shift from using copyright as the primary legal framework for open to one that uses labor and contract law to enact AI social agreements and regulations.

AI Catharsis #2 - Signal Preferences

I think signal preferences have a lot of potential.

Signal Preferences image adapted from Smoke Signals by Ashok Boghani CC BY-NC

As Molly noted, Creative Commons (CC) is experimenting with the idea of preference signals. In 2024 a Creative Commons Position Paper on Preference Signals was released that gives a good overview of what is being considered. This paper notes:

“What is new with generative AI is that the unanticipated uses are happening at scale. With concentrated power there is a risk of concentrated benefits and creators are questioning anew whether the bargain is worth it. The creative works are being used outside of their original context in a way that does not distribute any of the usual rewards back to the creator, either financial or reputational.”

One signal preferencing approach focuses on making it possible to signal opt-out vs opt-in. By default AI developers automatically opted us all in to their use of our data. This could be changed by regulation. But in the interim there is interest in a technical opt-out signalling preference that allows creators to prevent their content from being used for AI training.

The most promising solution seems to be Robots.txt. Audrey Hingle and Mallory Knodel note in their article "Robots.txt Is Having a Moment: Here's Why We Should Care" note:

“Robots.txt remains important as a foundational tool due to its widespread adoption and familiarity among website owners and developers. It provides a straightforward mechanism for declaring basic crawling permissions, offering a common starting point from which more advanced and specific solutions can evolve.”

However, they go on to note challenges:

“Robots.txt is primarily useful for website owners and publishers who control their own domains and can easily specify crawling rules. It doesn't effectively address content shared across multiple platforms or websites, nor does it give individual content creators, such as artists, musicians, writers, and other creative professionals, a way to easily communicate their consent preferences when they publish their work on third party sites, or when their work is used by others.”

Opt-in vs opt-out has largely focused on providing a way for websites and end users to opt out of having AI use their data for training. I’d love to see opt-in options that give users options for opting in to having their data used for AI that serves the public interest rather than for big tech for-profit shareholders.

Creative Commons is taking a different approach from opt-in vs opt-out. In their Position Paper on Preference Signals they say:

“CC’s approach is to reject the all-or-nothing framework and create options for sharing that reflect a more generous and collaborative spirit than default copyright. The CC licenses exist on a spectrum of permissiveness, all underpinned by the goal of enabling access to and sharing of knowledge and creativity as part of a global commons, built on mutual cooperation and shared values.”

They go on to note:

“We have uncovered many of the limitations of using instruments such as robots.txt as an indicator of opt-in or opt-out for generative AI training. In many cases, robots.txt and a website’s terms of service are inconsistent, and robots.txt is a limiting protocol when it comes to creator content in the commons as a public good (including, but not limited to art, culture, science, journalism, scientific data). Further, approaches that propagate the limiting binary of blunt instruments of opt-out do not take into consideration the values and social norms embedded in sharing content on the web. CC’s approach is to develop and advocate for tools that empower creators and contribute to a healthy and ethical commons for the public good.”

Creative Commons has made a lot of progress on this and on June 25, 2025 they are hosting a CC Signals Kickoff event. There invitation to this event says:

“We are building a standardized, global mechanism called CC signals that aims to increase the agency of those creating and stewarding the content that is relied upon for AI training. We invite you to join us as we officially kick off the CC signals project. During this kickoff event, you’ll hear from members of the CC team and community who will share an outline of the first public proposal of the CC signals framework. We will also provide resources that give background information and explore early thinking on legal and technical implementation of CC signals. Our goal is to set up members of the CC global community to engage with this proposal so that you can provide input and recommendations that will strengthen the initiative as we build toward pilot implementation later this year. This is a shared challenge, and a shared opportunity. Whether you're a funder, developer, policymaker, educator, platform operator, or creator, your participation matters. Join us.”

Anna CEO of Creative Commons says:

“Part of CC being louder about our values is also taking action in the form of a social protocol that is built on preference signals, a simple pact between those stewarding data and those reusing it for generative AI. Like CC licenses, they are aimed at well-meaning actors and designed to establish new social norms around sharing and access based on reciprocity. We’re actively working alongside values-aligned partners to pilot a framework that makes reciprocity actionable when shared knowledge is used to train generative AI.”

A key emphasis is that these preference signals are focused on reciprocity. I think we’ll see preferences address reciprocity in ways that involve direct or indirect financial subsidization, mutual exchange non-monetary contribution, and credit/attribution recognition.

One of the best things about existing CC licenses is their use of icons and human readable deeds. I expect these signal preferences to follow that successful format with icons that symbolize preferences and easy to read deeds that clearly specify the terms of the social agreement.

AI Catharsis #3 - Reciprocity

This is, perhaps, the single biggest fatal flaw of AI. I applaud CC’s efforts to address it.

Given the volume of data used to train AI models the value of any one creators data is likely very small. One precedent for thinking about this is music streaming where creators get a small fraction of a cent per stream. However, as seen by AI licensing deals, AI developers want to work more with large collection holders rather than individual creators. Reciprocity at the aggregate level rather than at the individual level.

Paul Keller in "AI, the Commons, and the limits of copyright" has a novel suggestion:

“We should look for a new social contract, such as the United Nations Global Digital Compact, to determine how to spend the surplus generated from the digital commons. A social contract would require any commercial deployment of generative AI systems trained on large amounts of publicly available content to pay a levy. The proceeds of such a levy system should then support the digital commons or contribute to other efforts that benefit humanity, for example, by paying into a global climate adaptation fund. Such a system would ensure that commercial actors who benefit disproportionately from access to the “sum of human knowledge in digital, scrapable form” can only do so under the condition that they also contribute back to the commons.”

Image adapted from Reciprocity by Keith Solomon CC BY-NC-SA

If AI is going to take and benefit from the commons it surely ought to contribute back to it? Do we, the open community, have ideas for how AI can contribute back to the commons?

The open community tends to openly license and share resources not with the expectation of personal financial gain but with the aim of enabling a commons that benefits all. What can AI developers contribute to a commons benefitting us all?

One consideration is credit and attribution. Creators of all types value reputation gain through acknowledgement and recognition. This is especially so in open where open science and open education communities use Creative Commons licenses that all mandate attribution. More broadly, citations and references are a core and essential practice in education and research.

By not providing credit and attribution AI breaks the reciprocal social norms of academia. This is one area where academia is well positioned to push back and advocate for fully referenced AI outputs. Credit, in the form of attribution, should be the default. At the very least the datasets on which the model is trained should be fully disclosed.

AI Catharsis #4 - Public AI

Another form reciprocity could take is support for open infrastructure that supports the commons more broadly. Open infrastructures are technologies, services, and resources provided and managed by non-commercial organizations. A big issue with AI is the complexity of the technology stack involved with developing and using AI applications. Reciprocity could entail creation of alternatives to for-profit AI in the form of open infrastructure for Public AI.

Open infrastructures are technologies, services, and resources provided and managed by non-commercial organisations. A big issue with AI is the complexity of the technology stack involved with developing and using AI applications. Open infrastructure can establish an alternative to For-profit AI in the form of Public AI.

In a recently published "Public AI - A Public Alternative to Private AI Dominance" blog post and paper Alek Tarkowski, Felix Sieker, Lea Gimpel, and Cailean Osborne write:

"Today’s most advanced AI systems and foundation models are largely proprietary and controlled by a small number of companies. There is a striking lack of viable public or open alternatives. This gap means that cutting-edge AI remains in the hands of a select few, with limited orientation toward the public interest, accountability or oversight.

Public AI is a vision of AI systems that are meaningful alternatives to the status quo. In order to serve the public interest, they are developed under transparent governance, with public accountability, equitable access to core components (such as data and models), and a clear focus on public-purpose functions."

Public AI serves the public interest. This seems common ground with open education and open science.

Public AI image adapted from What Is For the Public Good? photo by Paul Stacey CC BY-SA

The report goes on to say:

“A vision for public AI needs to take into account today’s constraints at the compute, data and model layers of the AI stack, and offer actionable steps to overcome these limitations. This white paper offers a clear overview of AI systems and infrastructures conceptualized as a stack of interdependent elements, with compute, data and models as its core layers.

It also identifies critical bottlenecks and dependencies in today’s AI ecosystem, where dependency on dominant or even monopolistic commercial solutions constrains development of public alternatives. It highlights the need for policy approaches that can orchestrate resources and various actors across layers, rather than attempting complete vertical integration of a publicly owned solution.

To achieve this, it proposes three core policy recommendations:

Develop and/or strengthen fully open source models and the broader open source ecosystem

Provide public compute infrastructure to support the development and use of open models

Scale investments in AI capabilities to ensure that sufficient talent is developing and adopting these models”

All these ideas seem pertinent to our open work. I also think Public AI gives users an opt-in option alternative to big tech. We should be thinking about how to have our work be Digital Public Goods.

Reciprocity remains an AI fatal flaw but there are options. How might all of us working in open education and open science pursue and shape these options?

AI Catharsis #5 - Collaborative open data set creation

One of the ways the open community can take action is around collaborative open data set creations as a form of Digital Public Goods.

Sunrise in Dew Drops on Spider Web by Matthew Paulson CC BY-NC-ND

A fascinating example is described in “Common Corpus: building AI as Commons” where Alek Tarkowski and Alicja Peszkowska share a profile of Pierre-Carl Langlais, a digital humanities researcher, Wikipedian, and a passionate advocate for open science. Pierre is also the co-founder of a French AI startup, Pleias, and the coordinator of Common Corpus, a public domain dataset for training LLMs.

They note:

“As the largest training data set for language models based on open content to date, Common Corpus is built with open data, including administrative data as well as cultural and open-science resources – like CC-licensed YouTube videos, 21 million digitized newspapers, and millions of books, among others. With 180 billion words, it is currently the largest English-speaking data set, but it is also multilingual and leads in terms of open data sets in French (110 billion words), German (30 billion words), Spanish, Dutch, and Italian.

Developing Common Corpus was an international effort involving a spectrum of stakeholders from the French Ministry of Culture to digital heritage researchers and open science LLM community, including companies such as HuggingFace, Occiglot, Eleuther, and Nomic AI. The collaborative effort behind building the data set reflects a vision of fostering a culture of openness and accessibility in AI research. Releasing Common Corpus is an attempt at democratizing access to large, quality data sets, which can be used for LLM training.”

Openly licensed, peer-reviewed data is generally considered more valuable by AI developers and researchers due to its accessibility, transparency, and potential for reduced bias. It has been fascinating to watch Wikimedia devise its strategy for AI. They state:

“As the internet continues to change and the use of AI increases, we expect that the knowledge ecosystem will become increasingly polluted with low-quality content, misinformation, and disinformation. We hope that people will continue to care about high quality, verifiable knowledge and they will continue to seek the truth, and we are betting that they will want to rely on real people to be the arbiters of knowledge. Made by human volunteers, we believe that Wikipedia can be that backbone of truth that people will want to turn to, either on the Wikimedia projects or through third party reuse.”

The popularity of Wikipedia as a data source resulted in such an increase in traffic from AI data scrapers that performance was being jeopardized for regular users. This led them to create a set of enterprise grade APIs in Wikimedia Enterprise that provide access to datasets of Wikipedia and sister projects while being supported by robust contracts, expert services, and unwavering support. Wikimedia Enterprise is a paid service targeted at commercial users of Wikimedia. Christie Rixford notes:

“At first glance, this initiative seems to be mainly about securing new revenue sources and thus improving sustainability of this civic platform. But in reality it is a milestone in developing Wikimedia as an access to knowledge infrastructure, and a strategy for adjusting to ongoing changes in the online ecosystem.”

They go on to say:

“The case of the Enterprise API is fascinating, as it shifts focus from the most visible part of the Wikimedia project: the production of encyclopaedic content by the community of volunteers. Instead, it focuses on code and infrastructure as tools for increasing access to knowledge. In doing so, it shows the limits of enabling reuse solely through legal means.”

I think there are opportunities to create not just Large Language Models but smaller more customized openly licensed data sets for various academic domains using open science and open education sources. Higher education institutions or national systems could embark on the creation of specialized AI that starts with an existing open data set to which are added institution faculty endorsed open data sources and even student created open data, toward providing a unique and localized AI experience.

At the OEGlobal 2024 conference in Melbourne last year Martin Dougiamas proposed an OER Dataset for AI project.

Another good example is the Open Datasets Initiative which aims to curate high-fidelity AI ready open datasets in biology and the life sciences. You can imagine something similar for climate action and other specific areas such as the United Nations SDGs or other similar targeted goals.

Conclusion: Tragedy Foretold? or Tragedy Avoided?

I have argued that to date AI is a tragedy caused by five fatal flaws:

Tragedy of Capitalism over the Commons

Taking without consent

Demotivating sharing

Breaking reciprocity, and

Bleeding open dry

The fatal flaws conflict with the very things AI relies on risking an inevitable downfall of AI and associated downfall of open education, open science and other open practices.

I have also argued that the fatal flaws can be changed and suggested five ways the open community can take action to do so:

Social Agreements & Regulations

Preference Signals

Reciprocity

Public AI

Collaborative open data set creation

There are other AI flaws and options for action but these, I think, are the key ones. The extent to which AI really does end up being a tragedy or, alternatively, an important and thriving addition to open knowledge creation depends on our response to it.

I hope this post helps make the fatal flaws of AI visible and inspires action to resolve them in ways that contribute to a flourishing commons.

In the words of Anna Tumadóttir:

“When we talk about defending the commons, it involves sustaining them, growing them, and making sure that the social contract remains intact for future generations of humans. And for that to happen, it’s time for some reciprocity.”

Reimagining Open At The Crossroads

I’ve been deeply engaged in a couple of open education projects. In Europe I’m helping SPARC Europe with their Connecting the Worlds of Open Science and Open Education effort. In North America I’m helping the Open Education Network with a project to increase educational equity in higher education by developing models and guidance to help academic libraries formalize programs that support open education work at their institutions. Both projects are fascinating. I’m enjoying the teams involved and opportunities to dive deeply into these topics. I’m learning lots.

I’ve been quiet here with my blog. After last years intensive exploration of AI I took a pause. Over the course of my career I’ve been through many waves of technology aiming to enhance and disrupt education in positive ways. That trail is a long one with few successes and lots of failures well documented by Audrey Watters. AI feels like yet another over hyped technology that, so far at least, is over promising and under-delivering. AI seems not to have learned anything from the education technologies that came before it. I have little interest in furthering the AI hype cycle. However, I remain quietly interested in how AI is playing out in education, particularly open education.

The blog post I wrote on AI From An Open Perspective generated a lot of interest. But, recently I’ve found myself shifting to AI From a Commons Perspective. As I see it AI has appropriated a data commons with little to no regard for the commons or the norms associated with it. The sheer scale and blatant disregard is callous and yet another example of voracious capitalism exploiting a commons for profit. Not a good feeling if you work in the commons, your work is part of a commons, or you simply believe in the commons.

I was recently interviewed on the meaning of open artificial intelligence in education during which I suggested there is no “open” artificial intelligence in education. AI is not open. No matter what the companies say, or name themselves, the extent to which AI is “open” is limited. Even just what open means in the context of AI is being highly debated. (See here, here, here, here). I very much share the perspective of Luis Villa who both celebrates and mourns the data commons in his excellent My Commons Roller Coaster post. The lack of transparency and exploitive nature of current AI development is like a rotten apple at the bottom of the barrel. I find it hard to enjoy the fruit knowing what lies at the heart of it and the potential for full rot.

I’ve moved AI to the back burner - at least for now.

I moved Reimagining Open At The Crossroads to the front burner.

Garden Crossroads by Paul Stacey CC BY-SA

In March I attended the Association for Learning Technology (ALT) OER 2024 Conference in Cork Ireland. I found the keynote “The future isn’t what it used to be: Open education at a crossroads” delivered by Dr Catherine Cronin and Professor Laura Czerniewicz very thought provoking. The keynote was recorded and is available to watch on ALT’s YouTube channel. A keynote essay to accompany their keynote is available online here.

The keynote is divided into three sections: (I) The big picture, (II) Open education at a crossroads, and (III) Creating better futures. In their keynote Catherine and Laura issue a call to action and a framework for proceeding. After the conference I began to think it was possible to actually implement their call to action.

With Catherine and Laura’s encouragement I submitted a wild card proposal to the Open Education Global (OEGlobal) 2024 conference proposing a series of asynchronous online activities related to their call to action that I proposed take place during the weeks leading up the the OEGlobal 2024 conference in Brisbane. My proposal was accepted.

I devised a series of simple and fun activities which have launched in the OEGlobal 2024 Interaction Zone. There is an Introduction and a series of activities starting with Reimagining Open At The Crossroads Through Music.

Everyone is welcome to participate in these activities whether you are attending the OEGlobal 2024 conference or not.

The Reimagining Open at the Crossroads schedule of activities is:

Activity 1: Reimagining Open at the Crossroads Through Music October 14, 2024.

Activity 2: What if?, October 21, 2024.

Activity 3: Make Claims, October 28, 2024.

Activity 4: Pathways and Connections November 4, 2024. In person version will take place at the OEGlobal 2024 conference in Brisbane, Australia on Wednesday, November 13th from 10:30-11:30 am.

Activity 5: Pathway Sharing. Online and in-person pathway outputs from activity 4 are invited to be posted here in OEGlobal 2024 Connect. Making pathways visible makes it possible to connect with others who are following the same path, both those in person attending the conference and those participating virtually. Connections can be made simply by replying to a shared pathway, providing a link to your pathway, and identifying points of mutual interest.

Here is a short summary I wrote of the entire Reimagining Open At The Crossroads activity including some details from the in person Pathways and Connections activity in Brisbane. Huge thanks to everyone who participated.

AI, E-learning & Open Education

Yu-Lun Huang from National Yang Ming Chiao Tung University in Taiwan runs an e-learning movement project for the Ministry of Education in Taiwan. More than thirty universities in Taiwan participate in the project. One of the challenges they encounter is leveraging modern technology, like artificial intelligence, to improve digital learning or online education, especially learning effectiveness.

Yu-Lun's e-learning project hosted an E-learning and Open Education international conference on December 14, 2023 in Taiwan. Yu-Lun had read my AI From an Open Perspective post and kindly invited me to give an virtual keynote talk about AI for the conference and I accepted.

I titled my talk “AI, E-Learning and Open Education”.

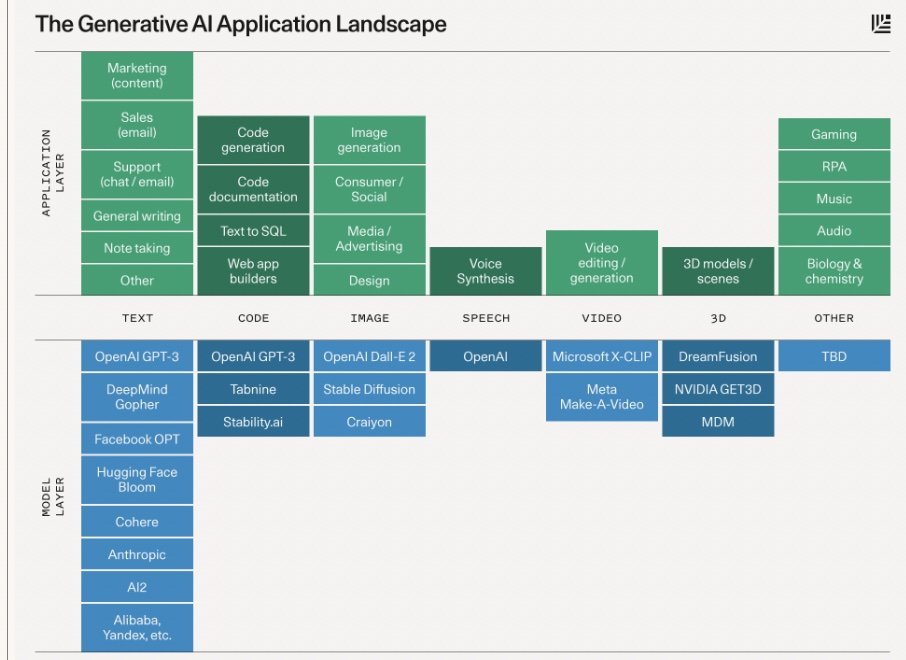

I used a diagram of the AI technology stack to connect AI to e-learning and open education.

For each layer of the stack I identify challenges and opportunities associated with AI in education. For example at the Data layer:

Yu-Lun asked me to focus on how AI can improve digital learning and learning effectiveness. Toward that end I describe AI pedagogical uses:

I provide a few examples of education AI applications and tools including those for pedagogy, AI for subject specific use (history, biology, math, music, …), and AI integrations into learning management systems.

I talk about AI digital literacy including the importance of prompts.

I talk about cheating and plagiarism.

I close my talk with a call to action suggesting actions educators in Taiwan (and elsewhere) can take to understand AI and use it in their teaching and learning practice.

And finally I concluded my talk with a recommendation specific to Taiwan.

Taiwan has a reputation as a smart, innovative nation and is already a world-leader in the area of semiconductors, information and communication technology (ICT) and manufacturing. These strengths position it well to build on and advance AI.

Taiwan came up with an AI strategy relatively early. Starting in 2017 it began making major investments in AI development. The Executive Yuan published a 4-year AI Action Plan with a budget of 38 billion NTD (1.1 billion EUR) and the Ministry of Science and Technology (MoST) published a 5-year AI Strategy with a budget of 16 billion NTD (490 million EUR). These initiatives were largely focused on economic matters including:

creating a national AI cloud service and high-speed computing platform

nurturing AI research service companies to form a regional AI innovation ecosystem

publishing open data

building AI innovation research centers to train AI specialists, invest in technological development, and expand the pool of AI talent

establishing an AI Robot Makerspace for innovative applications and integration of robotics software and hardware

encouraging AI start-ups including available start-up accelerators and incubators supported by multinationals such as Google, Microsoft and IBM

As a final recommendation I advocated for Taiwan to similarly invest in, align, and expand its strategy to include AI in education.

The talk was not recorded and I know it’s hard to get the full picture without accompanying narration but here is a link to my AI, E-Learning and Open Education slides. Many of the slides have links which are clickable in Slideshow view.

AI is changing fast so this is really a snapshot in time but I hope it is useful to educators in Taiwan and around the world. Big thanks to Yu-Lun for the invitation.

I enjoy dialogue about all things I post. Every post has a corresponding discussion forum on OEGlobal Connect. If you want to connect you’ll find me there. I welcome AI, E-Learning and Open Education discussion there.

AI, Creators and the Commons

On October 2nd, the day before the Creative Commons (CC) Global Summit in Mexico City began, OpenFuture and Creative Commons hosted an all day workshop on “AI, Creators and the Commons”.

The goal of this workshop, organized as a side event to the CC Summit, was to understand and explore the impact of generative machine learning (ML) on creative practices and the commons, and to the mission of Creative Commons in particular.

The workshop brought together members of the Creative Commons global network with expertise in copyright law, CC licenses as legal tools, and issues in generative AI, in order to develop an understanding of these issues, as they play out across different jurisdictions around the world.

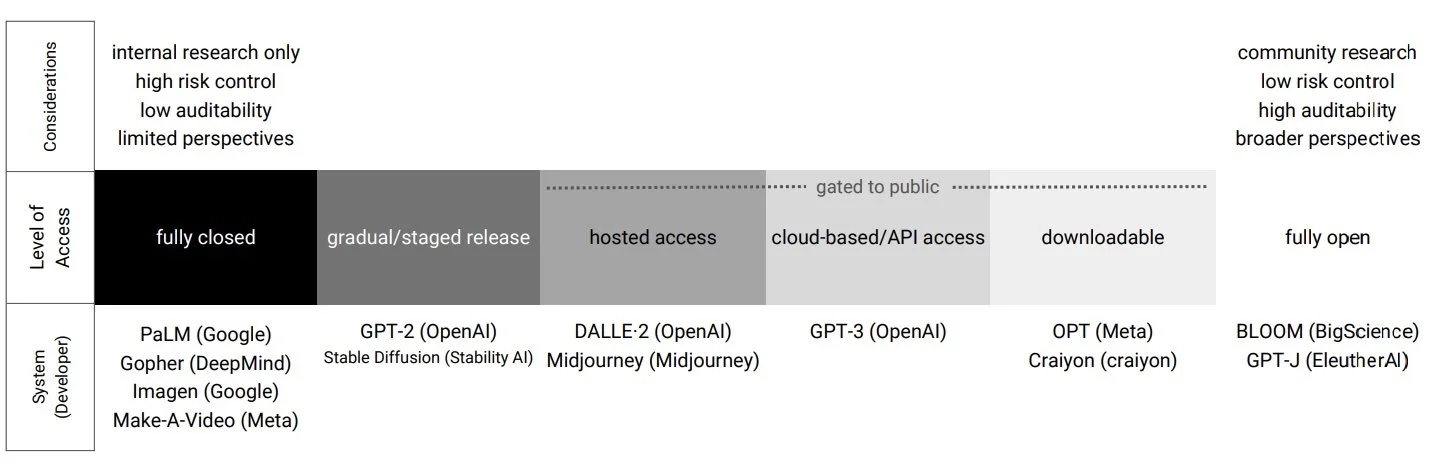

The workshop focused on the "input" side of generative AI particularly on the data used to train ML. The morning session focused on:

How do copyright systems around the world deal with the use of copyrighted works for training generative ML models?

The aim was to understand whether there are differences between jurisdictions that affect whether, and how copyright protected works (including CC licensed works) can be used for AI training. Questions asked included:

Are there differences between jurisdictions that affect whether, and how copyright protected works (including CC licensed works) can be used for AI training?

How do different legal frameworks deal with this issue and what balance do they strike?

What are the implications for creators?

What are the implications for using open licenses?

Here are a few of my takeaways and responses to those questions from this session.

In the USA two areas of copyright activity related to AI are copyright over AI outputs and copyright related to inputs used for AI training.

The legal case around the comic book Zaraya of the Dawn was used as an example of copyright related to AI outputs. Although originally granted full copyright that was subsequently revoked. Instead, the text as well as the selection, coordination, and arrangement of the work’s written and visual elements were granted copyright but the images in the work, generated by Midjourney, were not as they were deemed '“not the product of human authorship”.

A relationship between a creators use of AI technology and a creators use of photography related tools was made. In taking a shot a photographer engages in composition, timing, lighting, and setting. After taking a shot they engage in things like post editing, combining images, and final form. In photography copyright is assigned to the person who shoots or takes the shot. In what way is use of AI technology different?

Pertaining to issues related to copyright of inputs used for AI training, reference was made to the many class action legal cases that are underway contesting that use of copyrighted works to train AI constitutes copyright infringement.

The legal case around Getty Images suing AI art generator Stable Diffusion in the US for copyright infringement was used as an example. Getty has licensed its images and metadata to other AI art generators, but claims that Stability AI willfully scraped its images without permission. This claim is substantiated by Stable Diffusion recreating the Getty company’s watermark in some of its output images. This case is interesting for the way it attests copyright infringement but also manipulation of copyright data (the watermark).

Another example was the Authors Guild’s class action suit against OpenAI. This complaint draws attention to the fact that the plaintiffs’ books were downloaded from pirate ebook repositories and used to train ChatGPT. from which OpenAI expects to earn billions. The class action asserts that this threatens the role and livelihood of writers as a whole and seeks a settlement that gives authors choice and a reasonable licensing fee related to use of their copyrighted works.

US defendants in these cases are expected to argue that their use of these works to train their AI is allowed under “fair use”. From a copyright perspective Generative AI is particularly disruptive because outputs are not exactly similar to inputs. But a key question will be whether they are similar enough. Do they demonstrate high transformativity?

Some artists are suing based on principle. Some see AI competing with them unfairly. Some are concerned about AI replacing human labour. And still others see AI created works as a violation of their integrity and reputation.

Canada, Australia, New Zealand, Japan and the UK all have something called fair dealing which is similar to the US fair use. So in these jurisdictions AI use of copyrighted works are expected to argue fair dealing allows them to do what they are doing.

In addition to fair dealing, some jurisdictions have copyright exceptions that allow for text and data mining. Text and data mining is an automated process that analyzes massive amounts of text and data in digital form in order to discover new knowledge through patterns, trends and correlations. Initial efforts to establish text and data mining were done largely in the context of supporting research. Creators and general rights holders did not pay much attention to it. But now, with generative AI, text and data mining is affecting everyone. Text and data mining exceptions, where they exist, present another means by which AI tech companies can argue they are legally allowed to do what they do.

In 2016, Japan identified AI as one of the most important technological foundations for establishing a supersmart society they call Society 5.0. In 2017 they amended copyright legislation to allow text and data mining classifying the activities into four categories, (1) extraction, (2) comparison, (3) classification, or (4) other statistical analysis. The Japanese exception is regarded as the broadest text and data exception in the world because: (1) it applies to both commercial and noncommercial purposes; (2) it applies to any exploitation regardless of the rightholders reservations; (3) exploitation by any means is permitted; and (4) no lawful access is required.

Other countries such as Singapore, South Korea and Taiwan have adopted similar rules with the intention of removing uncertainties for their tech industries and positioning themselves in the AI race, unencumbered.

EU's Directive on Copyright in the Digital Single Market, adopted in 2019 introduced two text and data mining exceptions. One exception is for scientific research and cultural heritage with a caveat that use be non-commercial. The second is a more general purpose exception which allows commercial use as long as source data is lawfully accessed and creators have the option to opt out. This general purpose exception is seen as the one applicable to generative AI.

Opting out is seen as giving a creator leverage and the first step in securing a licensing deal. The opt-out requirement is seen as extremely difficult to implement at a practical level. At this time creators have no idea whether their works are being used by AI and AI tech players are not disclosing what data is being used to train their models. In some cases there may be multiple copies of works and there is no simple way of ensuring all copies of your work have been excluded. In addition it is not clear whether opt-out only applies to new uses or whether it is retroactive. How does opt out affect AI models that already include your work? Opt out is also seen as unfair to those who have passed away. They can't say no to their work being used by AI. Massive opt out may result in even greater bias being present in AI models.

There is a big challenge around ensuring opt out is respected. Early attempts to enable opt out such as those from ArtStation are seen as cumbersome difficult to enforce and placing a large part of the onus on the creator. Complex opt out systems will favour large players who are the biggest rights holders. Copyright holders are often not the creator themselves but large publishers or intermediaries who have acquired the rights to those works. Opt out needs to be simple enough that anyone can do it. There are some who don’t want opt out but instead a mandatory compensation.

An Africa perspective is one of being left behind and upending livelihoods. Big tech AI and transnationals have created a problem through their use of data sourced in Africa without collaboration or recognition of local communities. But what to do? The data is already taken.

Financing of AI research and use of data is all global north. AI is data colonization and a threat to sovereignty. Local languages in training AI are absent. Creators are facing realization that they can be replaced. Text and data mining was thought of as something for scientists and analyzing literature to get insights. Now there is a growing realization that text and data mining touches everyone. Does text and data mining, fair use / fair dealing really allow use of everything?

There is interest in creating a licensing market. But copyright is not and never has been a good or effective jobs program. Copyright has done a poor job of benefiting creators to generate a living. Copyright is wielded by a few big players to benefit a few. Want creators fairly rewarded and remunerated.

Kenyan workers cleaning AI data is unethical - not a copyright issue. Need to go beyond copyright and address labour issues.

Are we entering a knowledge renaissance? Or a desert where sharing is not allowed? Are countries taking a 21st century lens going to allow anything?

Latin America does not have copyright exceptions with a big enough scope to enable AI. They do not have fair use or text and data mining exceptions. Lack of these copyright exceptions is not really a current issue. Data privacy is the more pressing issue. Health data is an area of focus along with open science discussions on who owns data.

Renumeration tends to go not to the creator but to large rights holders. Desire to see creator right to renumeration against big platforms including but going beyond AI.

It is difficult for a country to figure out how to enter the AI field.

AI is data colonization. AI is extracting local community data and using it for commercial purposes. Local community data is communal not individual. It should not be used without local community permission. Western civilization notions like copyright are counter to traditional knowledge.

This session generated a lot of observations and questions for me:

Creators push for copyright because it is the only tool they have. What are other strategies?

Current copyright law is deficient in being able to handle all these AI issues. Ensuring an ethical, responsible and fair for all AI will require going beyond copyright law.

Data “mining” sounds like exploitation.

Rights holders want consent, credit, and compensation.

Opting out of something is different from opting in to something. What alternative to big tech AI can creators and AI users opt in to?

What is the commons? Are differentiations such as public domain, commons licensed, copyrighted still relevant? Is the entire Internet just a big commons database available for AI to freely scrape and use?

ML Training and Creators

This moderated group discussion after lunch focused on understanding the position of creators in relation to generative ML systems. What are the threats and opportunities for them? To what extent do creators have agency in determining how their work can or should be used? What tools (legal and/or technical) are available to creators to manage how their works can or should be used? How do CC licences fit into this, and is there a need for CC to adapt or expand the range of tools that it provides to creators?

Here are some of my points of learning and takeaways:

Law is slower than technology.

Creative Commons tools have reduced relevance in the context of generative AI. The way Creative Commons licenses involve attribution, giving back to the community, and creating a commons has been disrupted by AI. The original idea around Creative Commons was to give creators choice. How does Creative Commons support creator choice in the context of AI?

In the context of generative AI users are not just traditional creators but business enterprises, educators, biotech and health care. In what way are Creative Commons licenses useful to these new users?

Creative Commons has played a key historical role in the ethics of consent, expression of preferences, and norms around responsible use. In the context of AI how can CC continue with these roles? Perhaps there is a role around commons based models, commons based open outputs, and AI for the public good?

Generative AI represents a fundamental breaking of the reciprocity of the commons. It has spawned a lack of trust in copyright. AI needs to restore trust associated with data.

AI creates a different power structure. It breaks the social compact of the commons. Many rights holders have become overtly hostile to the commons. Opting out is in some ways an expression of “you can’t learn from me”, an undesirable outcome. One way some trust could be restored is if AI models were by default in the commons. This would restore some balance and giving back.

AI companies still don’t have a business model. The traditional big platform model of selling ads seems inappropriate. AI needs a model that does not give big tech disproportionate benefit.

We need a legal technical innovation (other than copyright) that builds a shared culture where we can all participate. We need a more global AI approach. We don’t have to be western to be modern. What we really need is guardianship not ownership. A means of connecting to one another across national boundaries. AI needs a set of principles and values shared across cultures. We need to give people agency back.

Creative Commons licensing is not a way to accomplish this. But, if it is not licensing then what?

ML Training and the Commons

This final session of the day moderated group discussion continued exploration of the relationship between generative ML and the commons. Questions posed included; How do generative ML models impact the commons? How important are the commons when it comes to ML training? How can we best manage the digital commons in the face of generative ML? How do traditional approaches to protecting the commons from appropriation, such as copyleft and share alike, interact with generative ML?

My note taking diminished this late in the day but here are a brief few points of interest that came up for me:

Creative Commons what are you going to do about AI?, is a question CC is hearing loud and clear.

Creative Commons is certainly about licenses, but not solely. CC is about reducing legal barriers to sharing and creativity. It’s not just about copyright, it’s about growing the commons.

CC tools should never overrule exceptions and limitations.

Copyright is not the best tool for resolving AI issues. CC licenses have held up well over time but other big issues have surfaced. What is legal vs what is not? What is right vs wrong? Calls for a code of ethics, community guidelines and social policies.

In creating new works AI could empower creators not betray them. AI’s unwillingness to cite sources and identify where data comes from is not helping.

Better sharing is sharing in the public interest.

AI could broaden the commons.

Is AI a wonder of the world?

Closing Thoughts

I found this day very thought provoking. This blog is by no means a complete comprehensive summary, merely things I took note of throughout the day.

Kudos to OpenFuture and Creative Commons for co-hosting this day and to all the participants for actively sharing their perspectives, experiences, and advice. I’m especially impressed with CC’s willingness to ask these hard questions and engage in self critique while at the same time actively seeking to define it’s position and role in generative AI and the commons going forward.

I appreciate being invited to this session and participating as a vocal active contributor. It helped develop a common understanding and generated observations, questions and discussion that carried forward into the follow-on three days of Creative Commons Global Summit.

I’m a strong advocate for being proactive in defining the future we want. As part of my participation in this workshop I mentioned being in a position to comment on and make recommendations related to Canada’s draft AI Act. I expressed interest in developing a shared collective position of principles, ideas and values that we could bring forward as part of an effort to shape AI legislation in all our respective countries right from the start.

I was thrilled when Paul Keller ran with this idea and over the ensuing three days of the Summit worked with a group of contributors to develop a statement on Making AI Work For Creators and the Commons.